Research Interests

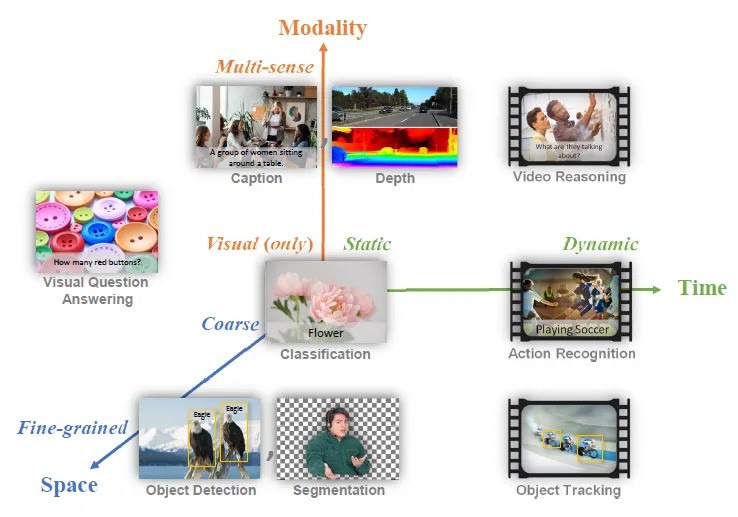

Foundation Models

My research explores parameter-efficient fine-tuning of large models, retrieval-augmented generation, in-context learning, and chain-of-thought reasoning. I am particularly interested in vision-language models such as CLIP, as well as recent advances in diffusion models and transformer architectures.

Navigation & Localization

I develop neural inertial navigation systems, visual-inertial odometry frameworks, and end-to-end SLAM solutions. My work focuses on multi-modal sensor fusion for robust localization in challenging environments, drawing on deep reinforcement learning and self-supervised learning paradigms.

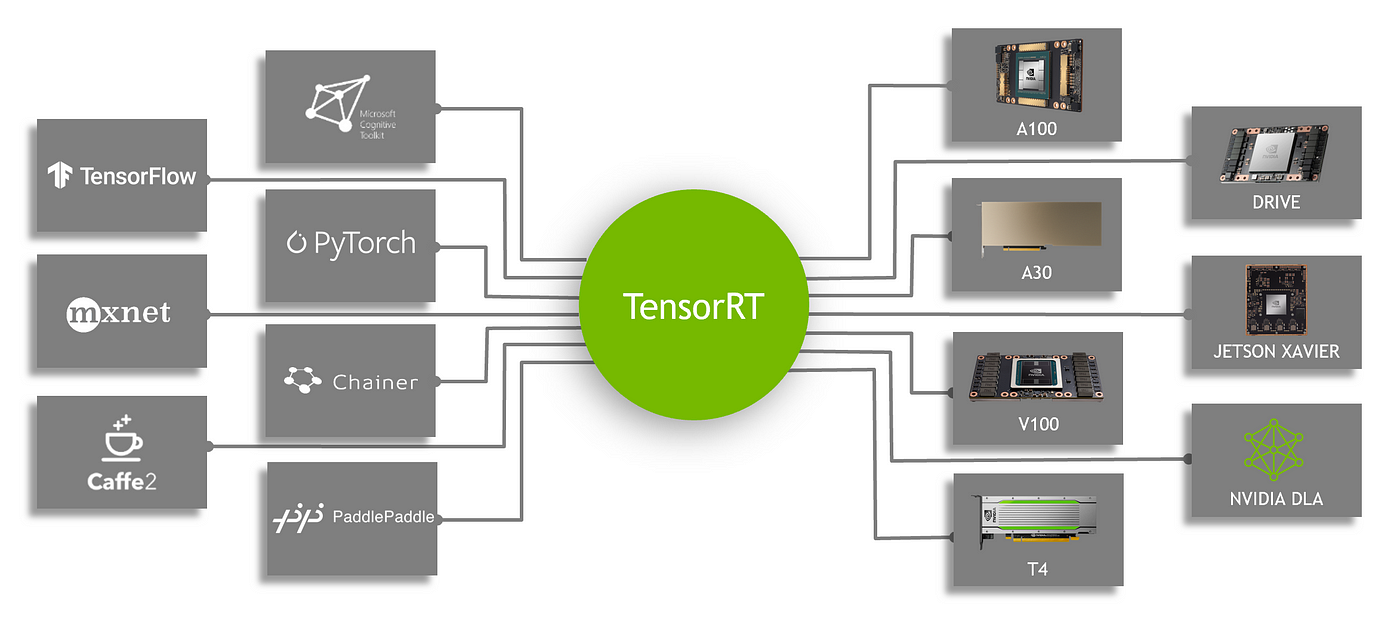

Hardware-Aware AI

I work on neural architecture search, quantization, and knowledge distillation to build efficient AI models. My research also covers hardware-aware optimization, adversarial robustness, differential privacy, and federated and distributed learning, with acceleration on FPGA/SoC platforms.
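As a generic illustration of the quantization techniques mentioned above, the sketch below shows symmetric, per-tensor int8 post-training quantization of a weight vector. It is a common textbook scheme, not necessarily the one used in this research, and the function names are hypothetical.

```python
# Symmetric per-tensor int8 quantization: a minimal, illustrative sketch.

def quantize_int8(weights):
    """Map float weights to int8 codes sharing one symmetric scale.

    Returns (codes, scale) such that weights ~= [c * scale for c in codes].
    """
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero tensor, where any scale is valid.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest code and clamp to the symmetric range [-127, 127].
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize_int8(codes, scale):
    """Recover approximate float weights from int8 codes."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    w = [0.5, -1.27, 0.003, 1.0]
    q, s = quantize_int8(w)
    w_hat = dequantize_int8(q, s)
    # Rounding error per weight is at most scale / 2.
    print(q, s, max(abs(a - b) for a, b in zip(w, w_hat)))
```

Each weight then needs 8 bits plus one shared float scale, a 4x reduction over float32; per-channel scales or quantization-aware training are common refinements when accuracy drops.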

Embodied AI

My research investigates vision-language-action models like PaLM-E, multimodal perception for robotic control, and sim-to-real transfer techniques to bridge virtual and physical environments for embodied AI agents.

Zero-Shot and Few-Shot Learning

I explore meta-learning approaches, prompt engineering techniques, cross-modal transfer methods, and low-resource adaptation strategies to enable AI systems to perform effectively with minimal training examples.

Current Projects

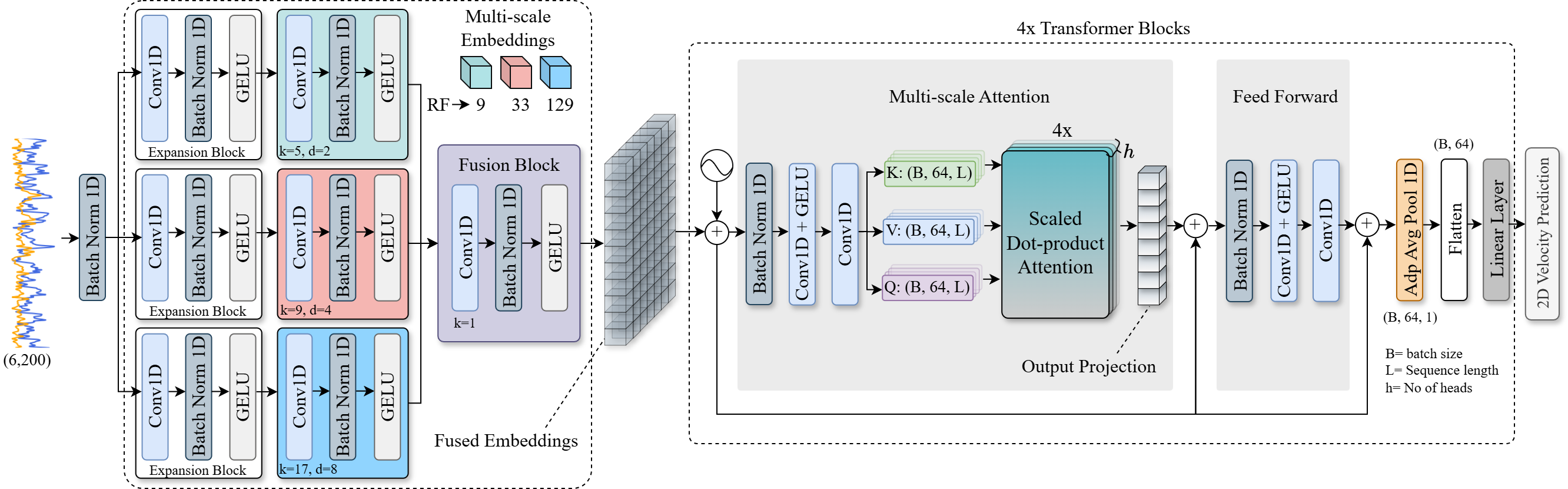

NanoMST: Ultra-Lightweight Multiscale Transformer for TinyML

Designing a hardware-aware multiscale transformer network optimized for TinyML applications, specifically targeting inertial motion tracking on resource-constrained devices. This project focuses on maintaining high accuracy while drastically reducing model size and computational requirements.

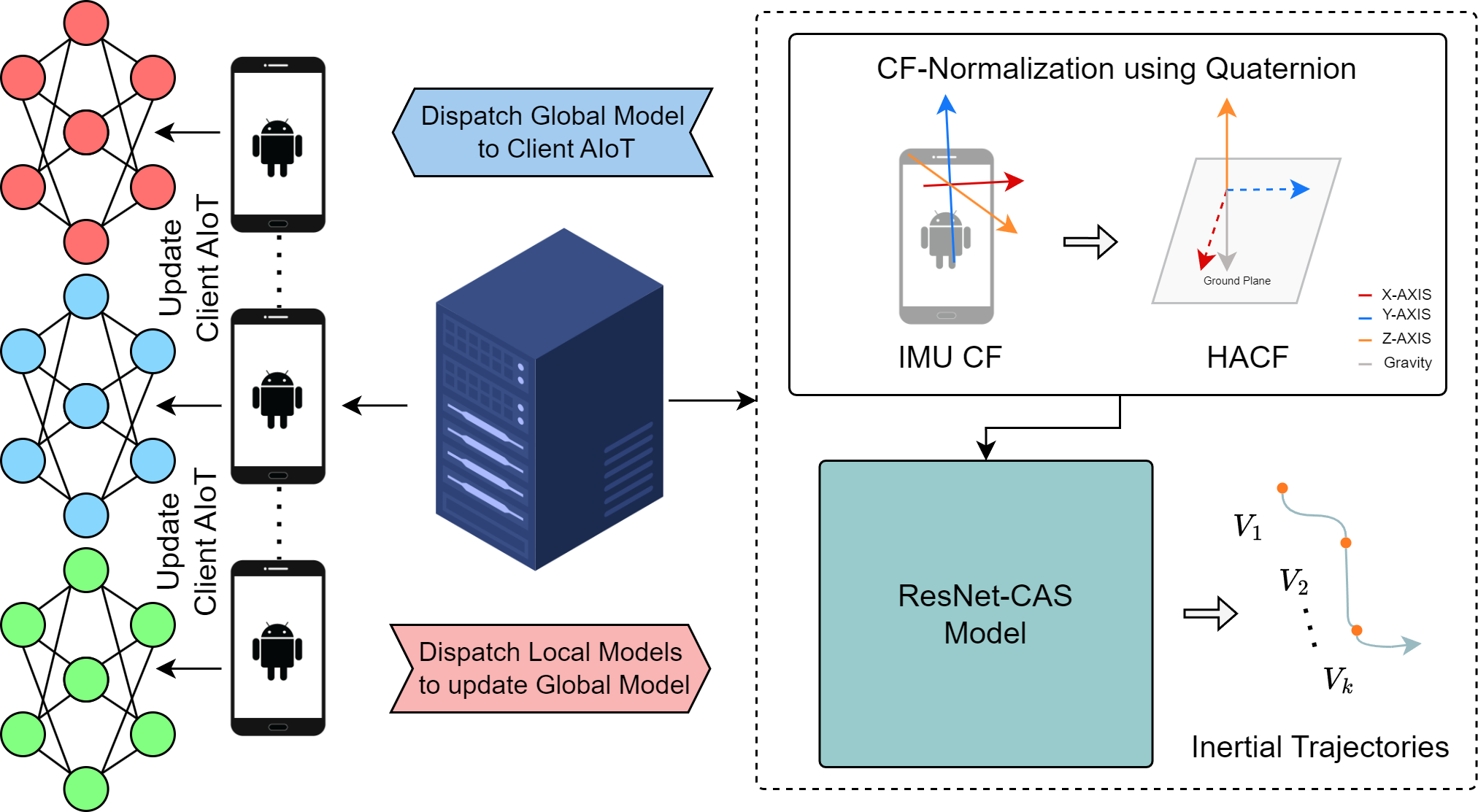

DeepILS: Domain-Invariant Inertial Localization System

Developing an AIoT-enabled inertial localization system that maintains accuracy across varied environments without requiring environment-specific training. This work combines advanced deep learning techniques with domain adaptation to create robust positioning solutions.

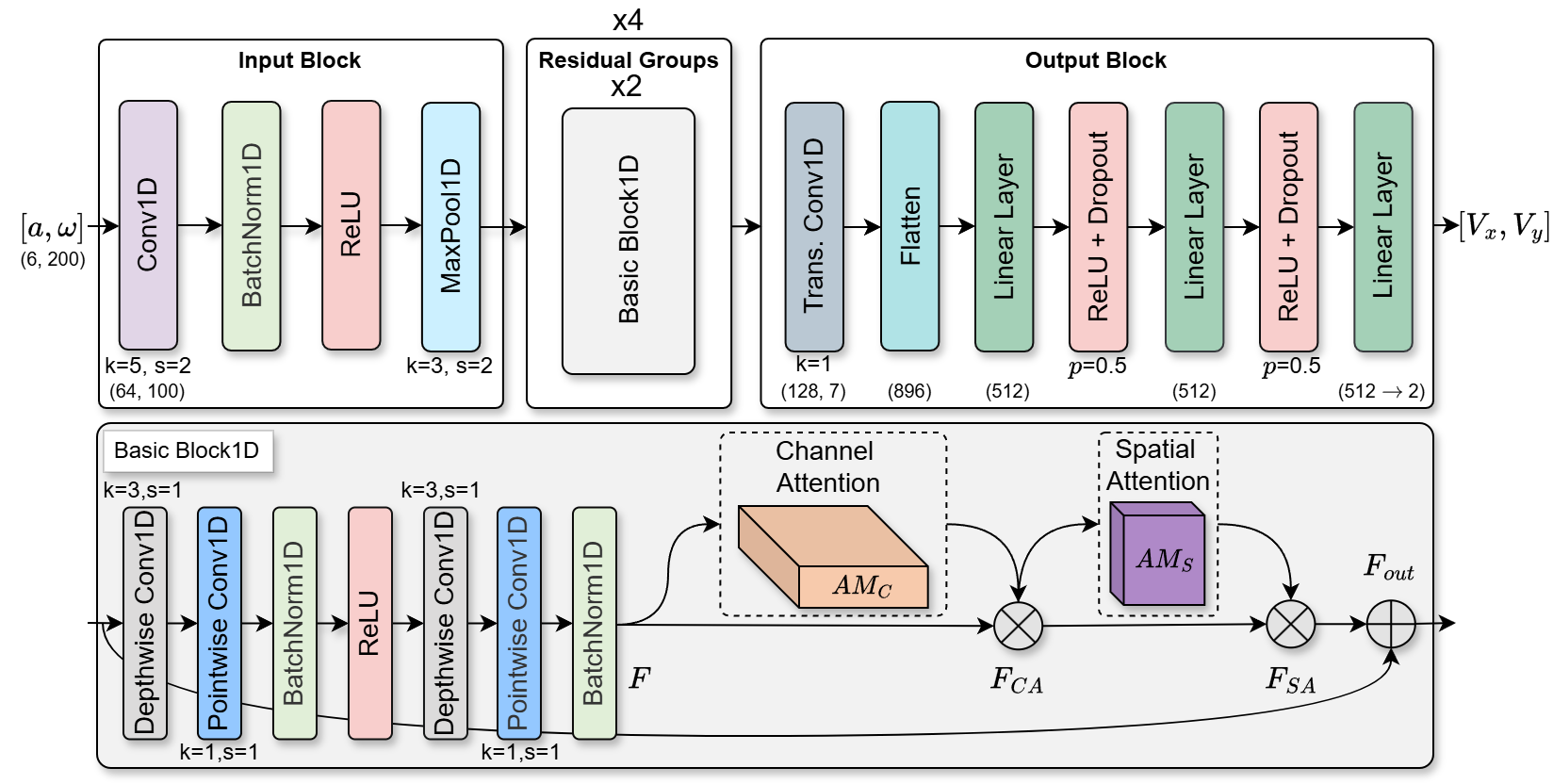

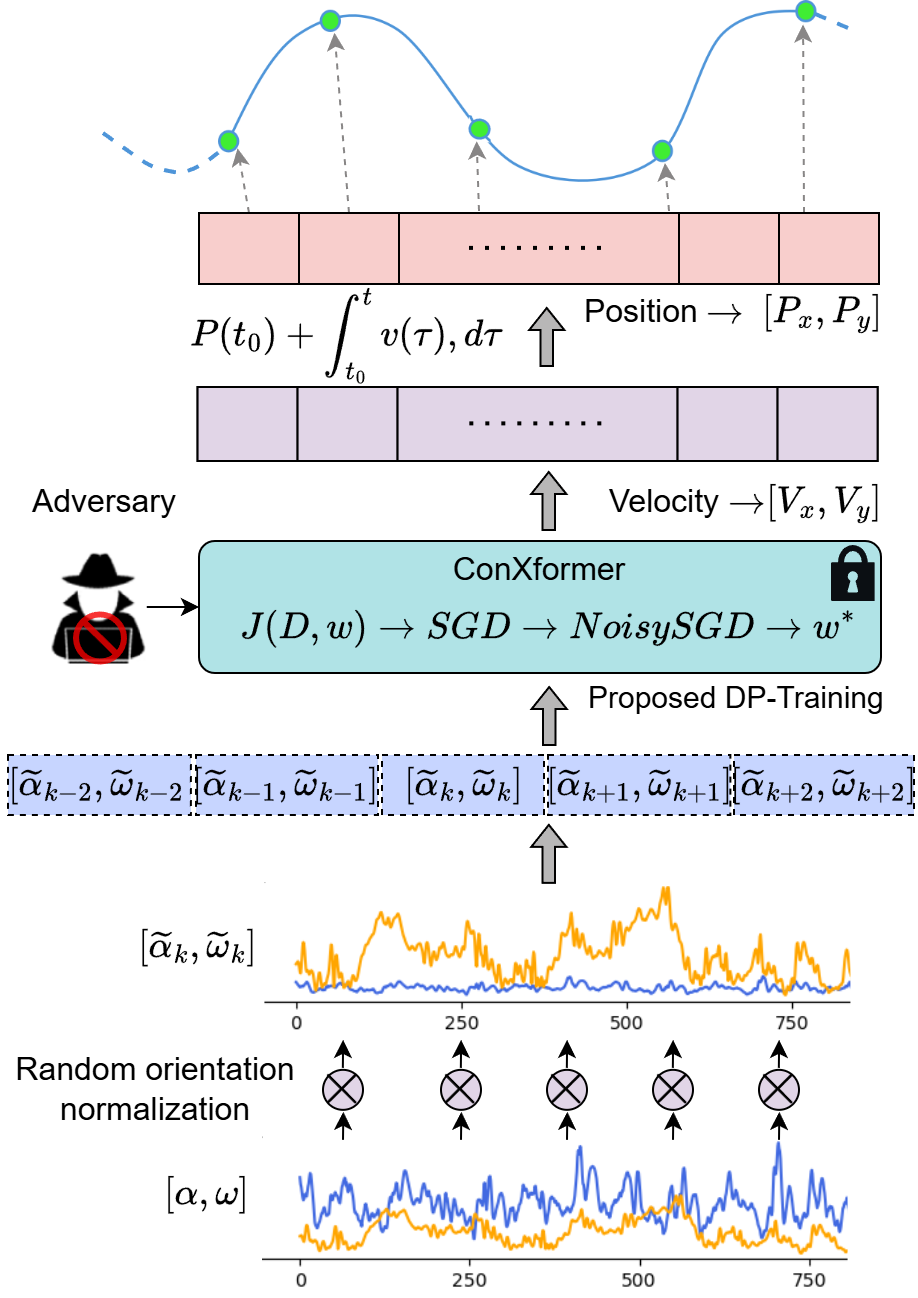

ConvXformer: Privacy-Preserving Hybrid Architecture

Creating a differentially private hybrid ConvNeXt-Transformer architecture for inertial navigation that balances accuracy with strong privacy guarantees. This work addresses the critical need for privacy-preserving AI in navigation applications.
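One common way to train a network with differential privacy is DP-SGD, whose core step clips each example's gradient to a fixed L2 norm and adds Gaussian noise to the sum. The sketch below shows only that aggregation step on plain Python lists; it is not the ConvXformer mechanism, the names are hypothetical, and privacy accounting (mapping the noise level and step count to an (epsilon, delta) guarantee) is omitted.

```python
# Core DP-SGD aggregation step: a minimal sketch with hypothetical names.
import math
import random


def clip(grad, max_norm):
    """Scale a gradient vector so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    factor = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * factor for g in grad]


def dp_average_gradient(per_example_grads, max_norm, noise_multiplier, rng):
    """Clip each example's gradient, sum, add N(0, (sigma * C)^2) noise, average."""
    dim = len(per_example_grads[0])
    total = [0.0] * dim
    for grad in per_example_grads:
        for i, g in enumerate(clip(grad, max_norm)):
            total[i] += g
    # Clipping bounds each example's influence, so noise scales with max_norm.
    sigma = noise_multiplier * max_norm
    noisy = [t + rng.gauss(0.0, sigma) for t in total]
    return [n / len(per_example_grads) for n in noisy]


if __name__ == "__main__":
    grads = [[3.0, 4.0], [0.3, 0.4]]
    print(dp_average_gradient(grads, max_norm=1.0, noise_multiplier=1.1,
                              rng=random.Random(0)))
```

Larger noise multipliers give stronger privacy at the cost of noisier updates, which is the accuracy/privacy balance the project describes.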

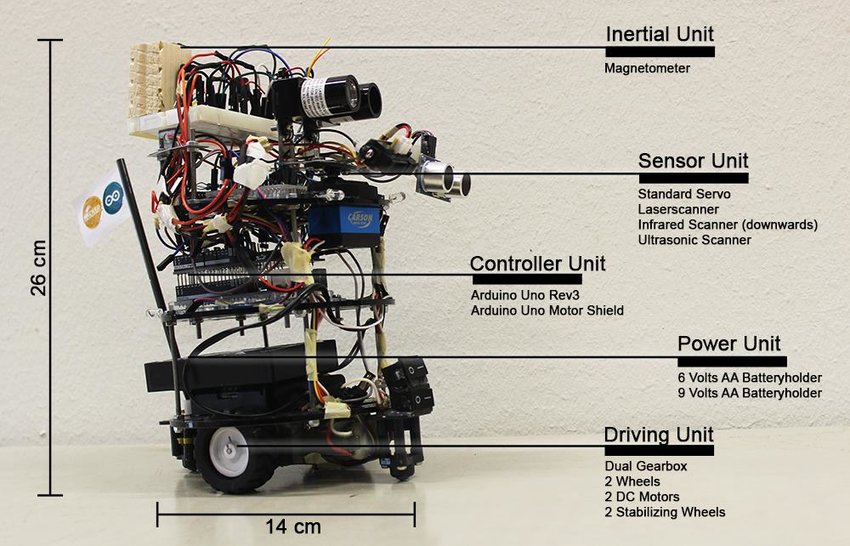

Multi-Sensor Fusion for Autonomous Mobile Robots

I have developed strong expertise in multi-sensor fusion SLAM (Simultaneous Localization and Mapping), integrating data from LiDAR, cameras, IMUs, GPS, and ultrasonic sensors to achieve accurate and robust localization and mapping. My skill set includes sensor calibration, time synchronization, data association, and probabilistic state estimation using Extended Kalman Filters, particle filters, and graph-based optimization. I am proficient in point cloud processing, visual-inertial odometry, and sensor noise modeling, and have practical experience developing algorithms with ROS, C++, Python, and MATLAB. I also have a solid understanding of perception pipelines, Bayesian inference, SLAM back-end optimization (pose-graph and factor-graph methods), and real-time system integration for multi-modal sensing.
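To make the predict/update cycle behind Kalman-filter fusion concrete, here is a minimal 1D sketch in which accelerometer samples drive the prediction and GPS-like position fixes drive the correction. It is a linear Kalman filter (an EKF follows the same cycle but linearizes nonlinear models with Jacobians); the class, parameters, noise values, and simulated biased IMU are illustrative assumptions, not a description of any specific system above.

```python
# Linear Kalman filter fusing IMU acceleration with position fixes (1D sketch).
# Hypothetical names and noise values; the state is [position, velocity].

class KalmanFilter1D:
    def __init__(self, accel_var, meas_var):
        self.x = [0.0, 0.0]                # state estimate: [position, velocity]
        self.P = [[1.0, 0.0], [0.0, 1.0]]  # state covariance
        self.accel_var = accel_var         # accelerometer noise variance
        self.meas_var = meas_var           # position-fix noise variance

    def predict(self, accel, dt):
        """Propagate the state with one accelerometer sample."""
        p, v = self.x
        self.x = [p + v * dt + 0.5 * accel * dt * dt, v + accel * dt]
        # P <- F P F^T + Q, with F = [[1, dt], [0, 1]].
        (a, b), (c, d) = self.P
        fp = [[a + dt * c, b + dt * d], [c, d]]
        fpft = [[fp[0][0] + dt * fp[0][1], fp[0][1]],
                [fp[1][0] + dt * fp[1][1], fp[1][1]]]
        # Q = accel_var * G G^T, where G = [dt^2 / 2, dt].
        g = [0.5 * dt * dt, dt]
        self.P = [[fpft[i][j] + self.accel_var * g[i] * g[j] for j in range(2)]
                  for i in range(2)]

    def update(self, z):
        """Correct the state with a position measurement (H = [1, 0])."""
        s = self.P[0][0] + self.meas_var          # innovation variance
        k = [self.P[0][0] / s, self.P[1][0] / s]  # Kalman gain
        y = z - self.x[0]                         # innovation
        self.x = [self.x[0] + k[0] * y, self.x[1] + k[1] * y]
        # P <- (I - K H) P
        row0 = self.P[0]
        self.P = [[self.P[i][j] - k[i] * row0[j] for j in range(2)]
                  for i in range(2)]


def simulate(steps=100, dt=0.1, true_accel=0.5, accel_bias=0.1):
    """Compare fused tracking with pure IMU dead reckoning under a biased IMU."""
    kf = KalmanFilter1D(accel_var=0.01, meas_var=0.25)
    dr_p = dr_v = 0.0
    true_p = 0.0
    for step in range(1, steps + 1):
        t = step * dt
        true_p = 0.5 * true_accel * t * t
        measured = true_accel + accel_bias  # biased accelerometer reading
        kf.predict(measured, dt)
        kf.update(true_p)                   # noiseless fix keeps the demo deterministic
        dr_p += dr_v * dt + 0.5 * measured * dt * dt
        dr_v += measured * dt
    return true_p, kf.x[0], dr_p


if __name__ == "__main__":
    true_p, fused_p, dr_p = simulate()
    print(f"true={true_p:.2f} fused={fused_p:.2f} dead_reckoning={dr_p:.2f}")
```

With the biased accelerometer, dead reckoning drifts quadratically, while the position fixes keep the fused estimate bounded. Estimating the bias as an extra state would remove the remaining small offset.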

Personalized Federated Learning for Neural Inertial Localization

Implementing a model-agnostic meta-learning (MAML) approach to personalize federated models for inertial localization, enabling rapid adaptation to individual movement patterns and device characteristics while preserving privacy.
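The toy sketch below illustrates the general idea of combining MAML-style meta-learning with federated aggregation, using first-order MAML (FOMAML) on a one-parameter linear model. Each simulated client adapts locally on a support set and returns only a meta-gradient computed on its query set; the server averages these to update a shared initialization, which a new user then personalizes with a few local steps. The model, client data, learning rates, and function names are hypothetical stand-ins, not the project's inertial localization model. Note that sharing gradients alone is not a formal privacy guarantee.

```python
# First-order MAML across simulated federated clients: a toy sketch.
# Hypothetical setup: each client's "movement pattern" is a slope in y = slope * x.

def grad_mse(w, xs, ys):
    """Gradient of mean squared error for the scalar model y_hat = w * x."""
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def client_meta_grad(w, support, query, inner_lr):
    """On-device: adapt on the support set, return a first-order meta-gradient.

    Only this scalar leaves the client; raw (x, y) samples stay local.
    """
    w_adapted = w - inner_lr * grad_mse(w, *support)
    return grad_mse(w_adapted, *query)


def make_client(slope):
    """Build one client's (support, query) data from its slope."""
    xs = [0.5, 1.0, 1.5, 2.0]
    ys = [slope * x for x in xs]
    return (xs[::2], ys[::2]), (xs[1::2], ys[1::2])


def meta_train(client_slopes, rounds=200, inner_lr=0.1, outer_lr=0.05):
    """Server loop: average client meta-gradients to update the shared init."""
    w = 0.0
    clients = [make_client(s) for s in client_slopes]
    for _ in range(rounds):
        grads = [client_meta_grad(w, sup, qry, inner_lr) for sup, qry in clients]
        w -= outer_lr * sum(grads) / len(grads)
    return w


def personalize(w, support, inner_lr=0.1, steps=1):
    """A new user fine-tunes the meta-learned init on a few local samples."""
    for _ in range(steps):
        w -= inner_lr * grad_mse(w, *support)
    return w


if __name__ == "__main__":
    w_meta = meta_train([0.8, 1.0, 1.2])
    new_support, _ = make_client(1.3)
    print(w_meta, personalize(w_meta, new_support, steps=5))
```

In this linear toy the meta-learned initialization simply converges to the average client slope. With nonlinear models, the MAML objective instead favors an initialization from which a few local gradient steps work well for each user.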